A “data-lake” is a centralized repository that allows you to store all your structured and unstructured data at any scale. High-Performance Computing (HPC) is the ability to process data and perform complex calculations at high speeds. What about combining large amounts of data with powerful computing resources? That would be the perfect blend. The convergence of the two fields - HPC and Big Data - is currently taking place, driven by the need for faster and higher-quality results. Organizations are seeking to obtain maximum value from their data, and this demands faster, more scalable and more cost-effective IT infrastructure.

ESCAPE is working to make this possible by addressing the challenges of open science for the particle, astroparticle and nuclear physics communities. ESCAPE is connecting the ESCAPE DIOS (Data Infrastructure for Open Science) service, an infrastructure adapted to the Exabyte-scale needs of large science projects, with the powerful computing resources of the European HPC centers.

Supporting high-energy physics in big data management challenges

The Compact Muon Solenoid (CMS) experiment was chosen to become the first success story of this “promising combination”. CMS is a high-energy physics experiment, part of the Large Hadron Collider (LHC) at CERN, in Switzerland. CMS is designed to see a wide range of particles and phenomena produced in high-energy collisions in the LHC. Like a cylindrical onion, it has different layers of detectors that stop and measure the different particles, and it uses this key data to build up a picture of events at the heart of the collision.

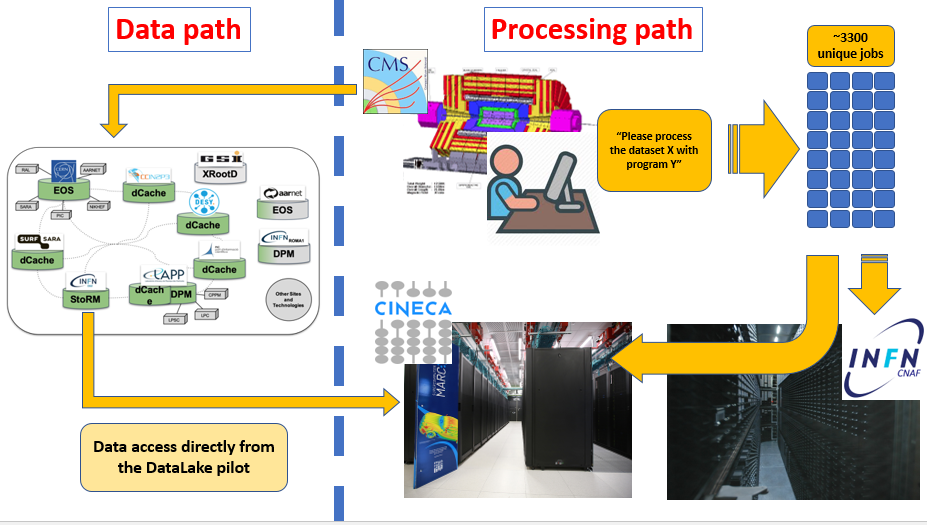

For this ESCAPE experiment, several analysis workflows were submitted to the CMS distributed computing infrastructure and reached nodes on the CINECA Marconi A2 HPC system (Marconi ranked 19th in the November 2019 Top500 list of the most powerful supercomputers). The system was made available thanks to the collaboration between INFN (National Institute for Nuclear Physics) and CINECA (the most powerful supercomputing centre for scientific research and the PRACE node in Italy).

Normally, the processing nodes at CINECA are not able to access data from the ESCAPE “data-lake” pilot. The ESCAPE DIOS pilot has enabled the connection between the ESCAPE data and the systems at CINECA.

Figure 1 The schematic data and processing flows.

The processing was executed using a real CMS input dataset, ingested by the ESCAPE DIOS pilot system at CNAF, thus proving the capabilities of the ESCAPE model and tools to enable large-scale, data-intensive computing tasks. With the jobs dispatched to both INFN and CINECA, the task was 100% successful.

Figure 2 CMS Task Monitoring

With ESCAPE DIOS, CMS can now give its users access to the scientific data deployed on the data-lake. The model can be readily exported to other HPC resources available in Europe and worldwide, and to other scientific endeavours; it is one of the first examples of interoperability between the Big Data and HPC realms, as expected in the European Open Science Cloud (EOSC) framework.

