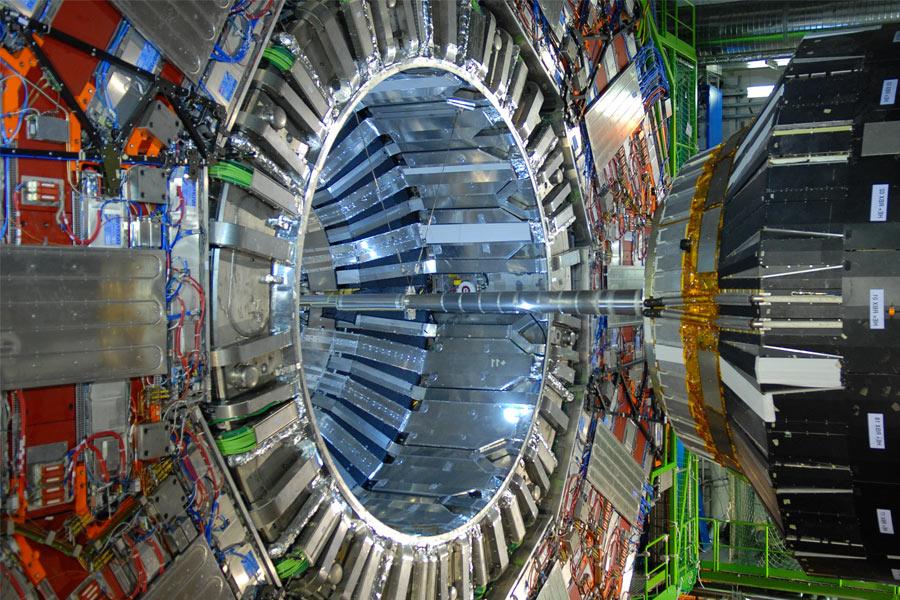

Preparing for Large Hadron Collider (LHC) computing posed a phenomenal new challenge to the way we understood the resourcing of physics computing. More than two decades ago, it became clear that computing and its associated infrastructure could no longer be centralised at a single laboratory, and the distributed computing paradigm started to take shape. Since the LHC started, the Worldwide LHC Computing Grid (WLCG) has been a critical backbone enabling science worldwide, confirming its revolutionary ideas on distributed computing well before the word "cloud" infiltrated the world. Today, we are at another critical juncture.

The High-Luminosity LHC (HL-LHC) program will produce large amounts of real and simulated data. Storing, managing and funding these data cannot be achieved simply by improving the current computing models of the LHC experiments. A major R&D program, the Data Organisation, Management and Access (DOMA) project, has been initiated within the WLCG framework. The objective of the DOMA project has been to discuss and prototype novel ideas about distributed storage, data processing, data transfers, protocols and networking, and to weigh their merits against the staffing and funding expected to be available at the sites.

However, this change of scale in scientific computing is not unique to the LHC; it has reached many sciences. Parallel science projects in Astrophysics, Cosmology and Neutrino physics have reached (or are planning to reach) similar scales in data volumes and data processing needs: SKA, DUNE, Belle-II, CTA, EGO, FAIR, LSST. There is a huge, and probably unique, opportunity to align across sciences and to find ways of sharing the approach to computing services, tools and knowledge. This would increase the scientific output of the computing infrastructures that are common to many: disk and tape storage, CPU/GPU processing and networks. In this respect, CERN is leading a work package within the EU-funded ESCAPE project, a spearhead activity to investigate the feasibility of a large-scale scientific computing infrastructure common for open science.

Together with the aforementioned experiments and the ESCAPE partner sites*, we are prototyping a common infrastructure that implements the WLCG datalake concept: fewer, larger centres manage the long-term data, while processing needs are served through streaming, caching and related tools. This decreases the cost of managing and operating large, complex storage systems, and reduces complexity in the experiments’ computing models. We rely on some of the tools currently used in WLCG and developed at CERN, such as Rucio for data management, FTS for file transfers, HammerCloud for testing sites and workloads, and perfSONAR for monitoring the network. These are the building blocks of the prototype, which will bring together storage resources from the ESCAPE partner sites*. The first real data from different disciplines are currently arriving in the datalake pilot, and the first workload implementations and data access are taking place (see event page).
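To make the caching idea concrete, here is a minimal Python sketch of a processing site reading through a small local cache that sits in front of a remote datalake. It is only an illustration under stated assumptions: `SiteCache`, `fetch_from_lake`, the file names and the sizes are all hypothetical, the eviction policy (least recently used) is a choice made for this example, and none of it reflects the actual Rucio, FTS or cache software used in the pilot.

```python
from collections import OrderedDict
from typing import Callable, Dict


class SiteCache:
    """Toy least-recently-used cache at a processing site (hypothetical)."""

    def __init__(self, fetch_from_lake: Callable[[str], bytes], capacity_bytes: int):
        self._fetch = fetch_from_lake      # how to read a file from the datalake
        self._capacity = capacity_bytes    # local disk budget for cached files
        self._files: "OrderedDict[str, bytes]" = OrderedDict()
        self._used = 0
        self.hits = 0
        self.misses = 0

    def read(self, name: str) -> bytes:
        """Return file content, fetching from the datalake only on a miss."""
        if name in self._files:
            self._files.move_to_end(name)  # mark as most recently used
            self.hits += 1
            return self._files[name]
        self.misses += 1
        data = self._fetch(name)
        self._store(name, data)
        return data

    def _store(self, name: str, data: bytes) -> None:
        if len(data) > self._capacity:
            return  # too large to cache: just stream it through
        # Evict least recently used files until the new one fits.
        while self._used + len(data) > self._capacity:
            _, evicted = self._files.popitem(last=False)
            self._used -= len(evicted)
        self._files[name] = data
        self._used += len(data)


# Stand-in for long-term storage at a large datalake centre (made-up data).
LAKE: Dict[str, bytes] = {
    "run1.dat": b"a" * 40,
    "run2.dat": b"b" * 40,
    "run3.dat": b"c" * 40,
}


def fetch_from_lake(name: str) -> bytes:
    return LAKE[name]


cache = SiteCache(fetch_from_lake, capacity_bytes=100)
for name in ["run1.dat", "run2.dat", "run1.dat", "run3.dat", "run2.dat"]:
    cache.read(name)

print(f"hits={cache.hits} misses={cache.misses}")
```

In this toy setup the processing site holds no long-term copy of the data: repeated reads are served locally, and everything else is fetched on demand from the larger centres.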

*CERN, Annecy (LAPP), Lyon (IN2P3), Hamburg (DESY), Barcelona (PIC), Darmstadt (GSI), Bologna/Napoli/Roma (INFN), Amsterdam (SURF-SARA) and Groningen (RuG)

Source: Original article published on CERN Computing Blog